mirror of

https://github.com/mendableai/firecrawl.git

synced 2024-11-16 11:42:24 +08:00

Merge branch 'main' into feat/actions

commit

0690cfeaad

5

.vscode/settings.json

vendored

Normal file

|

|

@ -0,0 +1,5 @@

+{
+  "rust-analyzer.linkedProjects": [
+    "apps/rust-sdk/Cargo.toml"
+  ]
+}

|

@ -103,7 +103,7 @@ This should return the response Hello, world!

|

||||||

If you’d like to test the crawl endpoint, you can run this

|

If you’d like to test the crawl endpoint, you can run this

|

||||||

|

|

||||||

```curl

|

```curl

|

||||||

curl -X POST http://localhost:3002/v0/crawl \

|

curl -X POST http://localhost:3002/v1/crawl \

|

||||||

-H 'Content-Type: application/json' \

|

-H 'Content-Type: application/json' \

|

||||||

-d '{

|

-d '{

|

||||||

"url": "https://mendable.ai"

|

"url": "https://mendable.ai"

|

||||||

|

|

|

||||||

30

README.md

|

|

@ -34,9 +34,9 @@

|

||||||

|

|

||||||

# 🔥 Firecrawl

|

# 🔥 Firecrawl

|

||||||

|

|

||||||

Crawl and convert any website into LLM-ready markdown or structured data. Built by [Mendable.ai](https://mendable.ai?ref=gfirecrawl) and the Firecrawl community. Includes powerful scraping, crawling and data extraction capabilities.

|

Empower your AI apps with clean data from any website. Featuring advanced scraping, crawling, and data extraction capabilities.

|

||||||

|

|

||||||

_This repository is in its early development stages. We are still merging custom modules in the mono repo. It's not completely yet ready for full self-host deployment, but you can already run it locally._

|

_This repository is in development, and we’re still integrating custom modules into the mono repo. It's not fully ready for self-hosted deployment yet, but you can run it locally._

|

||||||

|

|

||||||

## What is Firecrawl?

|

## What is Firecrawl?

|

||||||

|

|

||||||

|

|

@ -52,9 +52,12 @@ _Pst. hey, you, join our stargazers :)_

|

||||||

|

|

||||||

We provide an easy to use API with our hosted version. You can find the playground and documentation [here](https://firecrawl.dev/playground). You can also self host the backend if you'd like.

|

We provide an easy to use API with our hosted version. You can find the playground and documentation [here](https://firecrawl.dev/playground). You can also self host the backend if you'd like.

|

||||||

|

|

||||||

- [x] [API](https://firecrawl.dev/playground)

|

Check out the following resources to get started:

|

||||||

- [x] [Python SDK](https://github.com/mendableai/firecrawl/tree/main/apps/python-sdk)

|

- [x] [API](https://docs.firecrawl.dev/api-reference/introduction)

|

||||||

- [x] [Node SDK](https://github.com/mendableai/firecrawl/tree/main/apps/js-sdk)

|

- [x] [Python SDK](https://docs.firecrawl.dev/sdks/python)

|

||||||

|

- [x] [Node SDK](https://docs.firecrawl.dev/sdks/node)

|

||||||

|

- [x] [Go SDK](https://docs.firecrawl.dev/sdks/go)

|

||||||

|

- [x] [Rust SDK](https://docs.firecrawl.dev/sdks/rust)

|

||||||

- [x] [Langchain Integration 🦜🔗](https://python.langchain.com/docs/integrations/document_loaders/firecrawl/)

|

- [x] [Langchain Integration 🦜🔗](https://python.langchain.com/docs/integrations/document_loaders/firecrawl/)

|

||||||

- [x] [Langchain JS Integration 🦜🔗](https://js.langchain.com/docs/integrations/document_loaders/web_loaders/firecrawl)

|

- [x] [Langchain JS Integration 🦜🔗](https://js.langchain.com/docs/integrations/document_loaders/web_loaders/firecrawl)

|

||||||

- [x] [Llama Index Integration 🦙](https://docs.llamaindex.ai/en/latest/examples/data_connectors/WebPageDemo/#using-firecrawl-reader)

|

- [x] [Llama Index Integration 🦙](https://docs.llamaindex.ai/en/latest/examples/data_connectors/WebPageDemo/#using-firecrawl-reader)

|

||||||

|

|

@ -62,8 +65,12 @@ We provide an easy to use API with our hosted version. You can find the playgrou

|

||||||

- [x] [Langflow Integration](https://docs.langflow.org/)

|

- [x] [Langflow Integration](https://docs.langflow.org/)

|

||||||

- [x] [Crew.ai Integration](https://docs.crewai.com/)

|

- [x] [Crew.ai Integration](https://docs.crewai.com/)

|

||||||

- [x] [Flowise AI Integration](https://docs.flowiseai.com/integrations/langchain/document-loaders/firecrawl)

|

- [x] [Flowise AI Integration](https://docs.flowiseai.com/integrations/langchain/document-loaders/firecrawl)

|

||||||

|

- [x] [Composio Integration](https://composio.dev/tools/firecrawl/all)

|

||||||

- [x] [PraisonAI Integration](https://docs.praison.ai/firecrawl/)

|

- [x] [PraisonAI Integration](https://docs.praison.ai/firecrawl/)

|

||||||

- [x] [Zapier Integration](https://zapier.com/apps/firecrawl/integrations)

|

- [x] [Zapier Integration](https://zapier.com/apps/firecrawl/integrations)

|

||||||

|

- [x] [Cargo Integration](https://docs.getcargo.io/integration/firecrawl)

|

||||||

|

- [x] [Pipedream Integration](https://pipedream.com/apps/firecrawl/)

|

||||||

|

- [x] [Pabbly Connect Integration](https://www.pabbly.com/connect/integrations/firecrawl/)

|

||||||

- [ ] Want an SDK or Integration? Let us know by opening an issue.

|

- [ ] Want an SDK or Integration? Let us know by opening an issue.

|

||||||

|

|

||||||

To run locally, refer to guide [here](https://github.com/mendableai/firecrawl/blob/main/CONTRIBUTING.md).

|

To run locally, refer to guide [here](https://github.com/mendableai/firecrawl/blob/main/CONTRIBUTING.md).

|

||||||

|

|

@ -487,9 +494,20 @@ const scrapeResult = await app.scrapeUrl("https://news.ycombinator.com", {

|

||||||

console.log(scrapeResult.data["llm_extraction"]);

|

console.log(scrapeResult.data["llm_extraction"]);

|

||||||

```

|

```

|

||||||

|

|

||||||

|

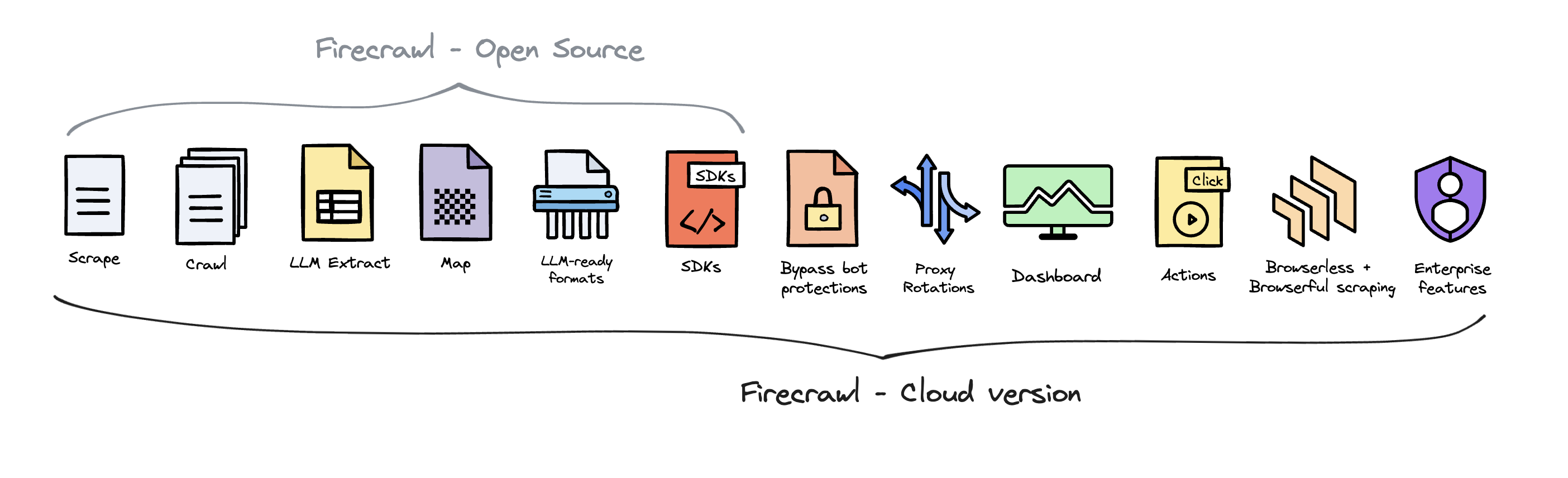

## Open Source vs Cloud Offering

|

||||||

|

|

||||||

|

Firecrawl is open source and available under the AGPL-3.0 license.

|

||||||

|

|

||||||

|

To deliver the best possible product, we offer a hosted version of Firecrawl alongside our open-source offering. The cloud solution allows us to continuously innovate and maintain a high-quality, sustainable service for all users.

|

||||||

|

|

||||||

|

Firecrawl Cloud is available at [firecrawl.dev](https://firecrawl.dev) and offers a range of features that are not available in the open source version:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## Contributing

|

## Contributing

|

||||||

|

|

||||||

We love contributions! Please read our [contributing guide](CONTRIBUTING.md) before submitting a pull request.

|

We love contributions! Please read our [contributing guide](CONTRIBUTING.md) before submitting a pull request. If you'd like to self-host, refer to the [self-hosting guide](SELF_HOST.md).

|

||||||

|

|

||||||

_It is the sole responsibility of the end users to respect websites' policies when scraping, searching and crawling with Firecrawl. Users are advised to adhere to the applicable privacy policies and terms of use of the websites prior to initiating any scraping activities. By default, Firecrawl respects the directives specified in the websites' robots.txt files when crawling. By utilizing Firecrawl, you expressly agree to comply with these conditions._

|

_It is the sole responsibility of the end users to respect websites' policies when scraping, searching and crawling with Firecrawl. Users are advised to adhere to the applicable privacy policies and terms of use of the websites prior to initiating any scraping activities. By default, Firecrawl respects the directives specified in the websites' robots.txt files when crawling. By utilizing Firecrawl, you expressly agree to comply with these conditions._

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -176,4 +176,4 @@ By addressing these common issues, you can ensure a smoother setup and operation

|

||||||

|

|

||||||

## Install Firecrawl on a Kubernetes Cluster (Simple Version)

|

## Install Firecrawl on a Kubernetes Cluster (Simple Version)

|

||||||

|

|

||||||

Read the [examples/kubernetes-cluster-install/README.md](https://github.com/mendableai/firecrawl/blob/main/examples/kubernetes-cluster-install/README.md) for instructions on how to install Firecrawl on a Kubernetes Cluster.

|

Read the [examples/kubernetes/cluster-install/README.md](https://github.com/mendableai/firecrawl/blob/main/examples/kubernetes/cluster-install/README.md) for instructions on how to install Firecrawl on a Kubernetes Cluster.

|

||||||

|

|

@ -19,8 +19,15 @@ import { billTeam } from "../../services/billing/credit_billing";

|

||||||

import { logJob } from "../../services/logging/log_job";

|

import { logJob } from "../../services/logging/log_job";

|

||||||

import { performCosineSimilarity } from "../../lib/map-cosine";

|

import { performCosineSimilarity } from "../../lib/map-cosine";

|

||||||

import { Logger } from "../../lib/logger";

|

import { Logger } from "../../lib/logger";

|

||||||

|

import Redis from "ioredis";

|

||||||

|

|

||||||

configDotenv();

|

configDotenv();

|

||||||

|

const redis = new Redis(process.env.REDIS_URL);

|

||||||

|

|

||||||

|

// Max Links that /map can return

|

||||||

|

const MAX_MAP_LIMIT = 5000;

|

||||||

|

// Max Links that "Smart /map" can return

|

||||||

|

const MAX_FIRE_ENGINE_RESULTS = 1000;

|

||||||

|

|

||||||

export async function mapController(

|

export async function mapController(

|

||||||

req: RequestWithAuth<{}, MapResponse, MapRequest>,

|

req: RequestWithAuth<{}, MapResponse, MapRequest>,

|

||||||

|

|

@ -30,8 +37,7 @@ export async function mapController(

|

||||||

|

|

||||||

req.body = mapRequestSchema.parse(req.body);

|

req.body = mapRequestSchema.parse(req.body);

|

||||||

|

|

||||||

|

const limit: number = req.body.limit ?? MAX_MAP_LIMIT;

|

||||||

const limit : number = req.body.limit ?? 5000;

|

|

||||||

|

|

||||||

const id = uuidv4();

|

const id = uuidv4();

|

||||||

let links: string[] = [req.body.url];

|

let links: string[] = [req.body.url];

|

||||||

|

|

@ -47,24 +53,61 @@ export async function mapController(

|

||||||

|

|

||||||

const crawler = crawlToCrawler(id, sc);

|

const crawler = crawlToCrawler(id, sc);

|

||||||

|

|

||||||

const sitemap = req.body.ignoreSitemap ? null : await crawler.tryGetSitemap();

|

|

||||||

|

|

||||||

if (sitemap !== null) {

|

|

||||||

sitemap.map((x) => {

|

|

||||||

links.push(x.url);

|

|

||||||

});

|

|

||||||

}

|

|

||||||

|

|

||||||

let urlWithoutWww = req.body.url.replace("www.", "");

|

let urlWithoutWww = req.body.url.replace("www.", "");

|

||||||

|

|

||||||

let mapUrl = req.body.search

|

let mapUrl = req.body.search

|

||||||

? `"${req.body.search}" site:${urlWithoutWww}`

|

? `"${req.body.search}" site:${urlWithoutWww}`

|

||||||

: `site:${req.body.url}`;

|

: `site:${req.body.url}`;

|

||||||

// www. seems to exclude subdomains in some cases

|

|

||||||

const mapResults = await fireEngineMap(mapUrl, {

|

const resultsPerPage = 100;

|

||||||

// limit to 100 results (beta)

|

const maxPages = Math.ceil(Math.min(MAX_FIRE_ENGINE_RESULTS, limit) / resultsPerPage);

|

||||||

numResults: Math.min(limit, 100),

|

|

||||||

});

|

const cacheKey = `fireEngineMap:${mapUrl}`;

|

||||||

|

const cachedResult = await redis.get(cacheKey);

|

||||||

|

|

||||||

|

let allResults: any[];

|

||||||

|

let pagePromises: Promise<any>[];

|

||||||

|

|

||||||

|

if (cachedResult) {

|

||||||

|

allResults = JSON.parse(cachedResult);

|

||||||

|

} else {

|

||||||

|

const fetchPage = async (page: number) => {

|

||||||

|

return fireEngineMap(mapUrl, {

|

||||||

|

numResults: resultsPerPage,

|

||||||

|

page: page,

|

||||||

|

});

|

||||||

|

};

|

||||||

|

|

||||||

|

pagePromises = Array.from({ length: maxPages }, (_, i) => fetchPage(i + 1));

|

||||||

|

allResults = await Promise.all(pagePromises);

|

||||||

|

|

||||||

|

await redis.set(cacheKey, JSON.stringify(allResults), "EX", 24 * 60 * 60); // Cache for 24 hours

|

||||||

|

}

|

||||||

|

|

||||||

|

// Parallelize sitemap fetch with serper search

|

||||||

|

const [sitemap, ...searchResults] = await Promise.all([

|

||||||

|

req.body.ignoreSitemap ? null : crawler.tryGetSitemap(),

|

||||||

|

...(cachedResult ? [] : pagePromises),

|

||||||

|

]);

|

||||||

|

|

||||||

|

if (!cachedResult) {

|

||||||

|

allResults = searchResults;

|

||||||

|

}

|

||||||

|

|

||||||

|

if (sitemap !== null) {

|

||||||

|

sitemap.forEach((x) => {

|

||||||

|

links.push(x.url);

|

||||||

|

});

|

||||||

|

}

|

||||||

|

|

||||||

|

let mapResults = allResults

|

||||||

|

.flat()

|

||||||

|

.filter((result) => result !== null && result !== undefined);

|

||||||

|

|

||||||

|

const minumumCutoff = Math.min(MAX_MAP_LIMIT, limit);

|

||||||

|

if (mapResults.length > minumumCutoff) {

|

||||||

|

mapResults = mapResults.slice(0, minumumCutoff);

|

||||||

|

}

|

||||||

|

|

||||||

if (mapResults.length > 0) {

|

if (mapResults.length > 0) {

|

||||||

if (req.body.search) {

|

if (req.body.search) {

|

||||||

|

|
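The hunk above replaces the single capped search call with paged requests, a 24-hour Redis cache, and a final cutoff. The following is a minimal TypeScript sketch of that flow, not the controller's actual code. `StringCache`, `PageFetcher`, `pagesFor`, and `cachedSearch` are hypothetical names introduced for illustration, the cache is an interface that a Redis client could sit behind, and the constants copy the values shown in the diff. Unlike the diff, the sketch caches the flattened result list rather than the per-page arrays.

```typescript
// Values copied from the diff; every other name in this block is hypothetical.
const MAX_MAP_LIMIT = 5000;
const MAX_FIRE_ENGINE_RESULTS = 1000;
const RESULTS_PER_PAGE = 100;
const CACHE_TTL_SECONDS = 24 * 60 * 60;

// Fetches one page of search results; pages are 1-indexed.
type PageFetcher<T> = (page: number) => Promise<T[]>;

// Minimal string cache; a Redis-backed implementation is assumed, not shown.
interface StringCache {
  get(key: string): Promise<string | null>;
  set(key: string, value: string, ttlSeconds: number): Promise<void>;
}

// Number of pages needed to cover the requested limit, capped by the
// search backend maximum.
function pagesFor(limit: number): number {
  return Math.ceil(Math.min(MAX_FIRE_ENGINE_RESULTS, limit) / RESULTS_PER_PAGE);
}

async function cachedSearch<T>(
  cache: StringCache,
  cacheKey: string,
  limit: number,
  fetchPage: PageFetcher<T>
): Promise<T[]> {
  const cutoff = Math.min(MAX_MAP_LIMIT, limit);

  // Cache hit: skip the search backend entirely.
  const hit = await cache.get(cacheKey);
  if (hit !== null) {
    return (JSON.parse(hit) as T[]).slice(0, cutoff);
  }

  // Cache miss: start every page request before awaiting any of them.
  const pending: Promise<T[]>[] = [];
  for (let page = 1; page <= pagesFor(limit); page++) {
    pending.push(fetchPage(page));
  }
  const pages = await Promise.all(pending);
  const results = ([] as T[]).concat(...pages);

  await cache.set(cacheKey, JSON.stringify(results), CACHE_TTL_SECONDS);
  return results.slice(0, cutoff);
}
```

Because the cache key in the diff is built from the query alone, a cached entry may hold fewer results than a later request with a larger `limit` asks for. The sketch has the same property.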

@ -84,17 +127,19 @@ export async function mapController(

|

||||||

// Perform cosine similarity between the search query and the list of links

|

// Perform cosine similarity between the search query and the list of links

|

||||||

if (req.body.search) {

|

if (req.body.search) {

|

||||||

const searchQuery = req.body.search.toLowerCase();

|

const searchQuery = req.body.search.toLowerCase();

|

||||||

|

|

||||||

links = performCosineSimilarity(links, searchQuery);

|

links = performCosineSimilarity(links, searchQuery);

|

||||||

}

|

}

|

||||||

|

|

||||||

links = links.map((x) => {

|

links = links

|

||||||

try {

|

.map((x) => {

|

||||||

return checkAndUpdateURLForMap(x).url.trim()

|

try {

|

||||||

} catch (_) {

|

return checkAndUpdateURLForMap(x).url.trim();

|

||||||

return null;

|

} catch (_) {

|

||||||

}

|

return null;

|

||||||

}).filter(x => x !== null);

|

}

|

||||||

|

})

|

||||||

|

.filter((x) => x !== null);

|

||||||

|

|

||||||

// allows for subdomains to be included

|

// allows for subdomains to be included

|

||||||

links = links.filter((x) => isSameDomain(x, req.body.url));

|

links = links.filter((x) => isSameDomain(x, req.body.url));

|

||||||

|

|
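The reformatted chain above maps each link through a normalizer that may throw, then filters out the `null` placeholders. A small sketch of the same pattern follows. `normalizeUrl` is a hypothetical stand-in for `checkAndUpdateURLForMap` (whose body is not shown in this diff), and the explicit type predicate is an assumption about how one might get a `string[]` result in TypeScript versions that do not infer the narrowing from `x !== null`.

```typescript
// Hypothetical stand-in for checkAndUpdateURLForMap: trims the input,
// parses it, and drops the fragment.
function normalizeUrl(raw: string): string {
  const url = new URL(raw.trim()); // throws a TypeError on unparseable input
  url.hash = "";
  return url.toString();
}

// Normalizes every link and drops the ones that fail to parse.
function normalizeAll(links: string[]): string[] {
  return links
    .map((x): string | null => {
      try {
        return normalizeUrl(x);
      } catch (_) {
        return null;
      }
    })
    // The explicit type predicate narrows (string | null)[] to string[].
    .filter((x): x is string => x !== null);
}
```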

@ -107,8 +152,10 @@ export async function mapController(

|

||||||

// remove duplicates that could be due to http/https or www

|

// remove duplicates that could be due to http/https or www

|

||||||

links = removeDuplicateUrls(links);

|

links = removeDuplicateUrls(links);

|

||||||

|

|

||||||

billTeam(req.auth.team_id, 1).catch(error => {

|

billTeam(req.auth.team_id, 1).catch((error) => {

|

||||||

Logger.error(`Failed to bill team ${req.auth.team_id} for 1 credit: ${error}`);

|

Logger.error(

|

||||||

|

`Failed to bill team ${req.auth.team_id} for 1 credit: ${error}`

|

||||||

|

);

|

||||||

// Optionally, you could notify an admin or add to a retry queue here

|

// Optionally, you could notify an admin or add to a retry queue here

|

||||||

});

|

});

|

||||||

|

|

||||||

|

|

@ -116,7 +163,7 @@ export async function mapController(

|

||||||

const timeTakenInSeconds = (endTime - startTime) / 1000;

|

const timeTakenInSeconds = (endTime - startTime) / 1000;

|

||||||

|

|

||||||

const linksToReturn = links.slice(0, limit);

|

const linksToReturn = links.slice(0, limit);

|

||||||

|

|

||||||

logJob({

|

logJob({

|

||||||

job_id: id,

|

job_id: id,

|

||||||

success: links.length > 0,

|

success: links.length > 0,

|

||||||

|

|

@ -140,3 +187,51 @@ export async function mapController(

|

||||||

scrape_id: req.body.origin?.includes("website") ? id : undefined,

|

scrape_id: req.body.origin?.includes("website") ? id : undefined,

|

||||||

});

|

});

|

||||||

}

|

}

|

||||||

|

|

||||||

|

// Subdomain sitemap url checking

|

||||||

|

|

||||||

|

// // For each result, check for subdomains, get their sitemaps and add them to the links

|

||||||

|

// const processedUrls = new Set();

|

||||||

|

// const processedSubdomains = new Set();

|

||||||

|

|

||||||

|

// for (const result of links) {

|

||||||

|

// let url;

|

||||||

|

// let hostParts;

|

||||||

|

// try {

|

||||||

|

// url = new URL(result);

|

||||||

|

// hostParts = url.hostname.split('.');

|

||||||

|

// } catch (e) {

|

||||||

|

// continue;

|

||||||

|

// }

|

||||||

|

|

||||||

|

// console.log("hostParts", hostParts);

|

||||||

|

// // Check if it's a subdomain (more than 2 parts, and not 'www')

|

||||||

|

// if (hostParts.length > 2 && hostParts[0] !== 'www') {

|

||||||

|

// const subdomain = hostParts[0];

|

||||||

|

// console.log("subdomain", subdomain);

|

||||||

|

// const subdomainUrl = `${url.protocol}//${subdomain}.${hostParts.slice(-2).join('.')}`;

|

||||||

|

// console.log("subdomainUrl", subdomainUrl);

|

||||||

|

|

||||||

|

// if (!processedSubdomains.has(subdomainUrl)) {

|

||||||

|

// processedSubdomains.add(subdomainUrl);

|

||||||

|

|

||||||

|

// const subdomainCrawl = crawlToCrawler(id, {

|

||||||

|

// originUrl: subdomainUrl,

|

||||||

|

// crawlerOptions: legacyCrawlerOptions(req.body),

|

||||||

|

// pageOptions: {},

|

||||||

|

// team_id: req.auth.team_id,

|

||||||

|

// createdAt: Date.now(),

|

||||||

|

// plan: req.auth.plan,

|

||||||

|

// });

|

||||||

|

// const subdomainSitemap = await subdomainCrawl.tryGetSitemap();

|

||||||

|

// if (subdomainSitemap) {

|

||||||

|

// subdomainSitemap.forEach((x) => {

|

||||||

|

// if (!processedUrls.has(x.url)) {

|

||||||

|

// processedUrls.add(x.url);

|

||||||

|

// links.push(x.url);

|

||||||

|

// }

|

||||||

|

// });

|

||||||

|

// }

|

||||||

|

// }

|

||||||

|

// }

|

||||||

|

// }

|

||||||

|

|

|

||||||

|

|

@ -36,17 +36,15 @@ export async function getLinksFromSitemap(

     const root = parsed.urlset || parsed.sitemapindex;
 
     if (root && root.sitemap) {
-      for (const sitemap of root.sitemap) {
-        if (sitemap.loc && sitemap.loc.length > 0) {
-          await getLinksFromSitemap({ sitemapUrl: sitemap.loc[0], allUrls, mode });
-        }
-      }
+      const sitemapPromises = root.sitemap
+        .filter(sitemap => sitemap.loc && sitemap.loc.length > 0)
+        .map(sitemap => getLinksFromSitemap({ sitemapUrl: sitemap.loc[0], allUrls, mode }));
+      await Promise.all(sitemapPromises);
     } else if (root && root.url) {
-      for (const url of root.url) {
-        if (url.loc && url.loc.length > 0 && !WebCrawler.prototype.isFile(url.loc[0])) {
-          allUrls.push(url.loc[0]);
-        }
-      }
+      const validUrls = root.url
+        .filter(url => url.loc && url.loc.length > 0 && !WebCrawler.prototype.isFile(url.loc[0]))
+        .map(url => url.loc[0]);
+      allUrls.push(...validUrls);
     }
   } catch (error) {
     Logger.debug(`Error processing sitemapUrl: ${sitemapUrl} | Error: ${error.message}`);

|

||||||

|

|

|

||||||

|

|
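The sitemap hunk above swaps a sequential `for ... await` loop for `Promise.all`, so the child sitemaps of an index are fetched concurrently. A minimal sketch of that traversal follows, assuming a hypothetical `SitemapLoader` that returns already-parsed nodes. `SitemapNode`, `SitemapLoader`, and `collectLinks` are illustrative names, not Firecrawl APIs.

```typescript
// Hypothetical parsed form of a sitemap: either an index that lists child
// sitemaps or a URL set that lists pages.
type SitemapNode =
  | { kind: "index"; children: string[] }
  | { kind: "urlset"; urls: string[] };

// Hypothetical loader; a real one would fetch and parse the XML.
type SitemapLoader = (sitemapUrl: string) => Promise<SitemapNode>;

async function collectLinks(
  sitemapUrl: string,
  load: SitemapLoader,
  allUrls: string[]
): Promise<string[]> {
  try {
    const node = await load(sitemapUrl);
    if (node.kind === "index") {
      // All child sitemaps are started together, then awaited as a group.
      await Promise.all(
        node.children.map((child) => collectLinks(child, load, allUrls))
      );
    } else {
      allUrls.push(...node.urls);
    }
  } catch (error) {
    // As in the diff, a failing sitemap is skipped rather than aborting the
    // whole traversal; a real implementation would log the error here.
  }
  return allUrls;
}
```

With concurrent children, the order in which URLs land in `allUrls` depends on which sitemap resolves first, so callers that need a stable order should sort or deduplicate afterwards.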

@ -1,10 +1,14 @@

|

||||||

import axios from "axios";

|

import axios from "axios";

|

||||||

import dotenv from "dotenv";

|

import dotenv from "dotenv";

|

||||||

import { SearchResult } from "../../src/lib/entities";

|

import { SearchResult } from "../../src/lib/entities";

|

||||||

|

import * as Sentry from "@sentry/node";

|

||||||

|

import { Logger } from "../lib/logger";

|

||||||

|

|

||||||

dotenv.config();

|

dotenv.config();

|

||||||

|

|

||||||

export async function fireEngineMap(q: string, options: {

|

export async function fireEngineMap(

|

||||||

|

q: string,

|

||||||

|

options: {

|

||||||

tbs?: string;

|

tbs?: string;

|

||||||

filter?: string;

|

filter?: string;

|

||||||

lang?: string;

|

lang?: string;

|

||||||

|

|

@ -12,34 +16,43 @@ export async function fireEngineMap(q: string, options: {

|

||||||

location?: string;

|

location?: string;

|

||||||

numResults: number;

|

numResults: number;

|

||||||

page?: number;

|

page?: number;

|

||||||

}): Promise<SearchResult[]> {

|

|

||||||

let data = JSON.stringify({

|

|

||||||

query: q,

|

|

||||||

lang: options.lang,

|

|

||||||

country: options.country,

|

|

||||||

location: options.location,

|

|

||||||

tbs: options.tbs,

|

|

||||||

numResults: options.numResults,

|

|

||||||

page: options.page ?? 1,

|

|

||||||

});

|

|

||||||

|

|

||||||

if (!process.env.FIRE_ENGINE_BETA_URL) {

|

|

||||||

console.warn("(v1/map Beta) Results might differ from cloud offering currently.");

|

|

||||||

return [];

|

|

||||||

}

|

}

|

||||||

|

): Promise<SearchResult[]> {

|

||||||

|

try {

|

||||||

|

let data = JSON.stringify({

|

||||||

|

query: q,

|

||||||

|

lang: options.lang,

|

||||||

|

country: options.country,

|

||||||

|

location: options.location,

|

||||||

|

tbs: options.tbs,

|

||||||

|

numResults: options.numResults,

|

||||||

|

page: options.page ?? 1,

|

||||||

|

});

|

||||||

|

|

||||||

let config = {

|

if (!process.env.FIRE_ENGINE_BETA_URL) {

|

||||||

method: "POST",

|

console.warn(

|

||||||

url: `${process.env.FIRE_ENGINE_BETA_URL}/search`,

|

"(v1/map Beta) Results might differ from cloud offering currently."

|

||||||

headers: {

|

);

|

||||||

"Content-Type": "application/json",

|

return [];

|

||||||

},

|

}

|

||||||

data: data,

|

|

||||||

};

|

let config = {

|

||||||

const response = await axios(config);

|

method: "POST",

|

||||||

if (response && response) {

|

url: `${process.env.FIRE_ENGINE_BETA_URL}/search`,

|

||||||

return response.data

|

headers: {

|

||||||

} else {

|

"Content-Type": "application/json",

|

||||||

|

},

|

||||||

|

data: data,

|

||||||

|

};

|

||||||

|

const response = await axios(config);

|

||||||

|

if (response && response) {

|

||||||

|

return response.data;

|

||||||

|

} else {

|

||||||

|

return [];

|

||||||

|

}

|

||||||

|

} catch (error) {

|

||||||

|

Logger.error(error);

|

||||||

|

Sentry.captureException(error);

|

||||||

return [];

|

return [];

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|
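The `fireEngineMap` change above wraps the request in `try`/`catch` so that a failed search yields an empty list instead of an exception. A minimal sketch of that fallback pattern follows, under stated assumptions. The HTTP call is injected as a hypothetical `PostJson` function instead of using axios, `onError` stands in for the `Logger.error` and `Sentry.captureException` calls, and the `SearchResult` shape here is a guess, since its definition is not part of this diff.

```typescript
// Assumed shape; the real SearchResult type is not shown in the diff.
type SearchResult = { url: string; title?: string; description?: string };

// Hypothetical transport: posts a JSON body and resolves with a response.
type PostJson = (url: string, body: unknown) => Promise<{ data?: unknown }>;

async function searchOrEmpty(
  baseUrl: string | undefined,
  query: string,
  numResults: number,
  page: number,
  post: PostJson,
  onError: (error: unknown) => void
): Promise<SearchResult[]> {
  // No backend configured: return nothing instead of failing.
  if (!baseUrl) {
    return [];
  }
  try {
    const response = await post(`${baseUrl}/search`, { query, numResults, page });
    // Only an array payload is treated as a result list.
    return Array.isArray(response.data) ? (response.data as SearchResult[]) : [];
  } catch (error) {
    // Report the failure, then degrade to an empty result list.
    onError(error);
    return [];
  }
}
```

Returning `[]` on failure keeps the calling controller simple, at the cost of making a backend outage look like "no results" to the caller.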

|

||||||

823

apps/api/v1-openapi.json

Normal file

|

|

@ -0,0 +1,823 @@

|

||||||

|

{

|

||||||

|

"openapi": "3.0.0",

|

||||||

|

"info": {

|

||||||

|

"title": "Firecrawl API",

|

||||||

|

"version": "v1",

|

||||||

|

"description": "API for interacting with Firecrawl services to perform web scraping and crawling tasks.",

|

||||||

|

"contact": {

|

||||||

|

"name": "Firecrawl Support",

|

||||||

|

"url": "https://firecrawl.dev",

|

||||||

|

"email": "support@firecrawl.dev"

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"servers": [

|

||||||

|

{

|

||||||

|

"url": "https://api.firecrawl.dev/v1"

|

||||||

|

}

|

||||||

|

],

|

||||||

|

"paths": {

|

||||||

|

"/scrape": {

|

||||||

|

"post": {

|

||||||

|

"summary": "Scrape a single URL and optionally extract information using an LLM",

|

||||||

|

"operationId": "scrapeAndExtractFromUrl",

|

||||||

|

"tags": ["Scraping"],

|

||||||

|

"security": [

|

||||||

|

{

|

||||||

|

"bearerAuth": []

|

||||||

|

}

|

||||||

|

],

|

||||||

|

"requestBody": {

|

||||||

|

"required": true,

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"url": {

|

||||||

|

"type": "string",

|

||||||

|

"format": "uri",

|

||||||

|

"description": "The URL to scrape"

|

||||||

|

},

|

||||||

|

"formats": {

|

||||||

|

"type": "array",

|

||||||

|

"items": {

|

||||||

|

"type": "string",

|

||||||

|

"enum": ["markdown", "html", "rawHtml", "links", "screenshot", "extract", "screenshot@fullPage"]

|

||||||

|

},

|

||||||

|

"description": "Formats to include in the output.",

|

||||||

|

"default": ["markdown"]

|

||||||

|

},

|

||||||

|

"onlyMainContent": {

|

||||||

|

"type": "boolean",

|

||||||

|

"description": "Only return the main content of the page excluding headers, navs, footers, etc.",

|

||||||

|

"default": true

|

||||||

|

},

|

||||||

|

"includeTags": {

|

||||||

|

"type": "array",

|

||||||

|

"items": {

|

||||||

|

"type": "string"

|

||||||

|

},

|

||||||

|

"description": "Tags to include in the output."

|

||||||

|

},

|

||||||

|

"excludeTags": {

|

||||||

|

"type": "array",

|

||||||

|

"items": {

|

||||||

|

"type": "string"

|

||||||

|

},

|

||||||

|

"description": "Tags to exclude from the output."

|

||||||

|

},

|

||||||

|

"headers": {

|

||||||

|

"type": "object",

|

||||||

|

"description": "Headers to send with the request. Can be used to send cookies, user-agent, etc."

|

||||||

|

},

|

||||||

|

"waitFor": {

|

||||||

|

"type": "integer",

|

||||||

|

"description": "Specify a delay in milliseconds before fetching the content, allowing the page sufficient time to load.",

|

||||||

|

"default": 0

|

||||||

|

},

|

||||||

|

"timeout": {

|

||||||

|

"type": "integer",

|

||||||

|

"description": "Timeout in milliseconds for the request",

|

||||||

|

"default": 30000

|

||||||

|

},

|

||||||

|

"extract": {

|

||||||

|

"type": "object",

|

||||||

|

"description": "Extract object",

|

||||||

|

"properties": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"description": "The schema to use for the extraction (Optional)"

|

||||||

|

},

|

||||||

|

"systemPrompt": {

|

||||||

|

"type": "string",

|

||||||

|

"description": "The system prompt to use for the extraction (Optional)"

|

||||||

|

},

|

||||||

|

"prompt": {

|

||||||

|

"type": "string",

|

||||||

|

"description": "The prompt to use for the extraction without a schema (Optional)"

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"required": ["url"]

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"responses": {

|

||||||

|

"200": {

|

||||||

|

"description": "Successful response",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"$ref": "#/components/schemas/ScrapeResponse"

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"402": {

|

||||||

|

"description": "Payment required",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"error": {

|

||||||

|

"type": "string",

|

||||||

|

"example": "Payment required to access this resource."

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"429": {

|

||||||

|

"description": "Too many requests",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"error": {

|

||||||

|

"type": "string",

|

||||||

|

"example": "Request rate limit exceeded. Please wait and try again later."

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"500": {

|

||||||

|

"description": "Server error",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"error": {

|

||||||

|

"type": "string",

|

||||||

|

"example": "An unexpected error occurred on the server."

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"/crawl/{id}": {

|

||||||

|

"parameters": [

|

||||||

|

{

|

||||||

|

"name": "id",

|

||||||

|

"in": "path",

|

||||||

|

"description": "The ID of the crawl job",

|

||||||

|

"required": true,

|

||||||

|

"schema": {

|

||||||

|

"type": "string",

|

||||||

|

"format": "uuid"

|

||||||

|

}

|

||||||

|

}

|

||||||

|

],

|

||||||

|

"get": {

|

||||||

|

"summary": "Get the status of a crawl job",

|

||||||

|

"operationId": "getCrawlStatus",

|

||||||

|

"tags": ["Crawling"],

|

||||||

|

"security": [

|

||||||

|

{

|

||||||

|

"bearerAuth": []

|

||||||

|

}

|

||||||

|

],

|

||||||

|

"responses": {

|

||||||

|

"200": {

|

||||||

|

"description": "Successful response",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"$ref": "#/components/schemas/CrawlStatusResponseObj"

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"402": {

|

||||||

|

"description": "Payment required",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"error": {

|

||||||

|

"type": "string",

|

||||||

|

"example": "Payment required to access this resource."

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"429": {

|

||||||

|

"description": "Too many requests",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"error": {

|

||||||

|

"type": "string",

|

||||||

|

"example": "Request rate limit exceeded. Please wait and try again later."

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"500": {

|

||||||

|

"description": "Server error",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"error": {

|

||||||

|

"type": "string",

|

||||||

|

"example": "An unexpected error occurred on the server."

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"delete": {

|

||||||

|

"summary": "Cancel a crawl job",

|

||||||

|

"operationId": "cancelCrawl",

|

||||||

|

"tags": ["Crawling"],

|

||||||

|

"security": [

|

||||||

|

{

|

||||||

|

"bearerAuth": []

|

||||||

|

}

|

||||||

|

],

|

||||||

|

"responses": {

|

||||||

|

"200": {

|

||||||

|

"description": "Successful cancellation",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"success": {

|

||||||

|

"type": "boolean",

|

||||||

|

"example": true

|

||||||

|

},

|

||||||

|

"message": {

|

||||||

|

"type": "string",

|

||||||

|

"example": "Crawl job successfully cancelled."

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"404": {

|

||||||

|

"description": "Crawl job not found",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"error": {

|

||||||

|

"type": "string",

|

||||||

|

"example": "Crawl job not found."

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"500": {

|

||||||

|

"description": "Server error",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"error": {

|

||||||

|

"type": "string",

|

||||||

|

"example": "An unexpected error occurred on the server."

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"/crawl": {

|

||||||

|

"post": {

|

||||||

|

"summary": "Crawl multiple URLs based on options",

|

||||||

|

"operationId": "crawlUrls",

|

||||||

|

"tags": ["Crawling"],

|

||||||

|

"security": [

|

||||||

|

{

|

||||||

|

"bearerAuth": []

|

||||||

|

}

|

||||||

|

],

|

||||||

|

"requestBody": {

|

||||||

|

"required": true,

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"url": {

|

||||||

|

"type": "string",

|

||||||

|

"format": "uri",

|

||||||

|

"description": "The base URL to start crawling from"

|

||||||

|

},

|

||||||

|

"excludePaths": {

|

||||||

|

"type": "array",

|

||||||

|

"items": {

|

||||||

|

"type": "string"

|

||||||

|

},

|

||||||

|

"description": "URL patterns to exclude"

|

||||||

|

},

|

||||||

|

"includePaths": {

|

||||||

|

"type": "array",

|

||||||

|

"items": {

|

||||||

|

"type": "string"

|

||||||

|

},

|

||||||

|

"description": "URL patterns to include"

|

||||||

|

},

|

||||||

|

"maxDepth": {

|

||||||

|

"type": "integer",

|

||||||

|

"description": "Maximum depth to crawl relative to the entered URL.",

|

||||||

|

"default": 2

|

||||||

|

},

|

||||||

|

"ignoreSitemap": {

|

||||||

|

"type": "boolean",

|

||||||

|

"description": "Ignore the website sitemap when crawling",

|

||||||

|

"default": true

|

||||||

|

},

|

||||||

|

"limit": {

|

||||||

|

"type": "integer",

|

||||||

|

"description": "Maximum number of pages to crawl",

|

||||||

|

"default": 10

|

||||||

|

},

|

||||||

|

"allowBackwardLinks": {

|

||||||

|

"type": "boolean",

|

||||||

|

"description": "Enables the crawler to navigate from a specific URL to previously linked pages.",

|

||||||

|

"default": false

|

||||||

|

},

|

||||||

|

"allowExternalLinks": {

|

||||||

|

"type": "boolean",

|

||||||

|

"description": "Allows the crawler to follow links to external websites.",

|

||||||

|

"default": false

|

||||||

|

},

|

||||||

|

"webhook": {

|

||||||

|

"type": "string",

|

||||||

|

"description": "The URL to send the webhook to. The webhook is triggered when the crawl starts (crawl.started), for every page crawled (crawl.page), and when the crawl completes (crawl.completed or crawl.failed). The response will be the same as the `/scrape` endpoint."

|

||||||

|

},

|

||||||

|

"scrapeOptions": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"formats": {

|

||||||

|

"type": "array",

|

||||||

|

"items": {

|

||||||

|

"type": "string",

|

||||||

|

"enum": ["markdown", "html", "rawHtml", "links", "screenshot"]

|

||||||

|

},

|

||||||

|

"description": "Formats to include in the output.",

|

||||||

|

"default": ["markdown"]

|

||||||

|

},

|

||||||

|

"headers": {

|

||||||

|

"type": "object",

|

||||||

|

"description": "Headers to send with the request. Can be used to send cookies, user-agent, etc."

|

||||||

|

},

|

||||||

|

"includeTags": {

|

||||||

|

"type": "array",

|

||||||

|

"items": {

|

||||||

|

"type": "string"

|

||||||

|

},

|

||||||

|

"description": "Tags to include in the output."

|

||||||

|

},

|

||||||

|

"excludeTags": {

|

||||||

|

"type": "array",

|

||||||

|

"items": {

|

||||||

|

"type": "string"

|

||||||

|

},

|

||||||

|

"description": "Tags to exclude from the output."

|

||||||

|

},

|

||||||

|

"onlyMainContent": {

|

||||||

|

"type": "boolean",

|

||||||

|

"description": "Only return the main content of the page excluding headers, navs, footers, etc.",

|

||||||

|

"default": true

|

||||||

|

},

|

||||||

|

"waitFor": {

|

||||||

|

"type": "integer",

|

||||||

|

"description": "Number of milliseconds to wait for the page to load before fetching content",

|

||||||

|

"default": 123

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"required": ["url"]

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"responses": {

|

||||||

|

"200": {

|

||||||

|

"description": "Successful response",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"$ref": "#/components/schemas/CrawlResponse"

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"402": {

|

||||||

|

"description": "Payment required",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"error": {

|

||||||

|

"type": "string",

|

||||||

|

"example": "Payment required to access this resource."

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"429": {

|

||||||

|

"description": "Too many requests",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"error": {

|

||||||

|

"type": "string",

|

||||||

|

"example": "Request rate limit exceeded. Please wait and try again later."

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"500": {

|

||||||

|

"description": "Server error",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"error": {

|

||||||

|

"type": "string",

|

||||||

|

"example": "An unexpected error occurred on the server."

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"/map": {

|

||||||

|

"post": {

|

||||||

|

"summary": "Map multiple URLs based on options",

|

||||||

|

"operationId": "mapUrls",

|

||||||

|

"tags": ["Mapping"],

|

||||||

|

"security": [

|

||||||

|

{

|

||||||

|

"bearerAuth": []

|

||||||

|

}

|

||||||

|

],

|

||||||

|

"requestBody": {

|

||||||

|

"required": true,

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"url": {

|

||||||

|

"type": "string",

|

||||||

|

"format": "uri",

|

||||||

|

"description": "The base URL to start crawling from"

|

||||||

|

},

|

||||||

|

"search": {

|

||||||

|

"type": "string",

|

||||||

|

"description": "Search query to use for mapping. During the Alpha phase, the 'smart' part of the search functionality is limited to 100 search results. However, if map finds more results, there is no limit applied."

|

||||||

|

},

|

||||||

|

"ignoreSitemap": {

|

||||||

|

"type": "boolean",

|

||||||

|

"description": "Ignore the website sitemap when crawling",

|

||||||

|

"default": true

|

||||||

|

},

|

||||||

|

"includeSubdomains": {

|

||||||

|

"type": "boolean",

|

||||||

|

"description": "Include subdomains of the website",

|

||||||

|

"default": false

|

||||||

|

},

|

||||||

|

"limit": {

|

||||||

|

"type": "integer",

|

||||||

|

"description": "Maximum number of links to return",

|

||||||

|

"default": 5000,

|

||||||

|

"maximum": 5000

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"required": ["url"]

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"responses": {

|

||||||

|

"200": {

|

||||||

|

"description": "Successful response",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"$ref": "#/components/schemas/MapResponse"

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"402": {

|

||||||

|

"description": "Payment required",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"error": {

|

||||||

|

"type": "string",

|

||||||

|

"example": "Payment required to access this resource."

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"429": {

|

||||||

|

"description": "Too many requests",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"error": {

|

||||||

|

"type": "string",

|

||||||

|

"example": "Request rate limit exceeded. Please wait and try again later."

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"500": {

|

||||||

|

"description": "Server error",

|

||||||

|

"content": {

|

||||||

|

"application/json": {

|

||||||

|

"schema": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"error": {

|

||||||

|

"type": "string",

|

||||||

|

"example": "An unexpected error occurred on the server."

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"components": {

|

||||||

|

"securitySchemes": {

|

||||||

|

"bearerAuth": {

|

||||||

|

"type": "http",

|

||||||

|

"scheme": "bearer"

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"schemas": {

|

||||||

|

"ScrapeResponse": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"success": {

|

||||||

|

"type": "boolean"

|

||||||

|

},

|

||||||

|

"data": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"markdown": {

|

||||||

|

"type": "string"

|

||||||

|

},

|

||||||

|

"html": {

|

||||||

|

"type": "string",

|

||||||

|

"nullable": true,

|

||||||

|

"description": "HTML version of the content on page if `html` is in `formats`"

|

||||||

|

},

|

||||||

|

"rawHtml": {

|

||||||

|

"type": "string",

|

||||||

|

"nullable": true,

|

||||||

|

"description": "Raw HTML content of the page if `rawHtml` is in `formats`"

|

||||||

|

},

|

||||||

|

"screenshot": {

|

||||||

|

"type": "string",

|

||||||

|

"nullable": true,

|

||||||

|

"description": "Screenshot of the page if `screenshot` is in `formats`"

|

||||||

|

},

|

||||||

|

"links": {

|

||||||

|

"type": "array",

|

||||||

|

"items": {

|

||||||

|

"type": "string"

|

||||||

|

},

|

||||||

|

"description": "List of links on the page if `links` is in `formats`"

|

||||||

|

},

|

||||||

|

"metadata": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"title": {

|

||||||

|

"type": "string"

|

||||||

|

},

|

||||||

|

"description": {

|

||||||

|

"type": "string"

|

||||||

|

},

|

||||||

|

"language": {

|

||||||

|

"type": "string",

|

||||||

|

"nullable": true

|

||||||

|

},

|

||||||

|

"sourceURL": {

|

||||||

|

"type": "string",

|

||||||

|

"format": "uri"

|

||||||

|

},

|

||||||

|

"<any other metadata> ": {

|

||||||

|

"type": "string"

|

||||||

|

},

|

||||||

|

"statusCode": {

|

||||||

|

"type": "integer",

|

||||||

|

"description": "The status code of the page"

|

||||||

|

},

|

||||||

|

"error": {

|

||||||

|

"type": "string",

|

||||||

|

"nullable": true,

|

||||||

|

"description": "The error message of the page"

|

||||||

|

}

|

||||||

|

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"llm_extraction": {

|

||||||

|

"type": "object",

|

||||||

|

"description": "Displayed when using LLM Extraction. Extracted data from the page following the schema defined.",

|

||||||

|

"nullable": true

|

||||||

|

},

|

||||||

|

"warning": {

|

||||||

|

"type": "string",

|

||||||

|

"nullable": true,

|

||||||

|

"description": "Can be displayed when using LLM Extraction. Warning message will let you know any issues with the extraction."

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"CrawlStatusResponseObj": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"status": {

|

||||||

|

"type": "string",

|

||||||

|

"description": "The current status of the crawl. Can be `scraping`, `completed`, or `failed`."

|

||||||

|

},

|

||||||

|

"total": {

|

||||||

|

"type": "integer",

|

||||||

|

"description": "The total number of pages the crawl attempted to process."

|

||||||

|

},

|

||||||

|

"completed": {

|

||||||

|

"type": "integer",

|

||||||

|

"description": "The number of pages that have been successfully crawled."

|

||||||

|

},

|

||||||

|

"creditsUsed": {

|

||||||

|

"type": "integer",

|

||||||

|

"description": "The number of credits used for the crawl."

|

||||||

|

},

|

||||||

|

"expiresAt": {

|

||||||

|

"type": "string",

|

||||||

|

"format": "date-time",

|

||||||

|

"description": "The date and time when the crawl will expire."

|

||||||

|

},

|

||||||

|

"next": {

|

||||||

|

"type": "string",

|

||||||

|

"nullable": true,

|

||||||

|

"description": "The URL to retrieve the next 10MB of data. Returned if the crawl is not completed or if the response is larger than 10MB."

|

||||||

|

},

|

||||||

|

"data": {

|

||||||

|

"type": "array",

|

||||||

|

"description": "The data of the crawl.",

|

||||||

|

"items": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"markdown": {

|

||||||

|

"type": "string"

|

||||||

|

},

|

||||||

|

"html": {

|

||||||

|

"type": "string",

|

||||||

|

"nullable": true,

|

||||||

|

"description": "HTML version of the content on page if `html` is in `formats`"

|

||||||

|

},

|

||||||

|

"rawHtml": {

|

||||||

|

"type": "string",

|

||||||

|

"nullable": true,

|

||||||

|

"description": "Raw HTML content of the page if `rawHtml` is in `formats`"

|

||||||

|

},

|

||||||

|

"links": {

|

||||||

|

"type": "array",

|

||||||

|

"items": {

|

||||||

|

"type": "string"

|

||||||

|

},

|

||||||

|

"description": "List of links on the page if `links` is in `formats`"

|

||||||

|

},

|

||||||

|

"screenshot": {

|

||||||

|

"type": "string",

|

||||||

|

"nullable": true,

|

||||||

|

"description": "Screenshot of the page if `screenshot` is in `formats`"

|

||||||

|

},

|

||||||

|

"metadata": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"title": {

|

||||||

|

"type": "string"

|

||||||

|

},

|

||||||

|

"description": {

|

||||||

|

"type": "string"

|

||||||

|

},

|

||||||

|

"language": {

|

||||||

|

"type": "string",

|

||||||

|

"nullable": true

|

||||||

|

},

|

||||||

|

"sourceURL": {

|

||||||

|

"type": "string",

|

||||||

|

"format": "uri"

|

||||||

|

},

|

||||||

|

"<any other metadata> ": {

|

||||||

|

"type": "string"

|

||||||

|

},

|

||||||

|

"statusCode": {

|

||||||

|

"type": "integer",

|

||||||

|

"description": "The status code of the page"

|

||||||

|

},

|

||||||

|

"error": {

|

||||||

|

"type": "string",

|

||||||

|

"nullable": true,

|

||||||

|

"description": "The error message of the page"

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"CrawlResponse": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"success": {

|

||||||

|

"type": "boolean"

|

||||||

|

},

|

||||||

|

"id": {

|

||||||

|

"type": "string"

|

||||||

|

},

|

||||||

|

"url": {

|

||||||

|

"type": "string",

|

||||||

|

"format": "uri"

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"MapResponse": {

|

||||||

|

"type": "object",

|

||||||

|

"properties": {

|

||||||

|

"success": {

|

||||||

|

"type": "boolean"

|

||||||

|

},

|

||||||

|

"links": {

|

||||||

|

"type": "array",

|

||||||

|

"items": {

|

||||||

|

"type": "string"

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

|

},

|

||||||

|

"security": [

|

||||||

|

{

|

||||||

|

"bearerAuth": []

|

||||||

|

}

|

||||||

|

]

|

||||||

|

}
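The `/crawl` request body described in the spec above could be assembled along these lines. This is a minimal sketch, not part of the SDK: `build_crawl_payload` and `ALLOWED_FORMATS` are hypothetical names, and the defaults are copied from the schema's `default` fields as an assumption about what a client might send explicitly.

```python
import json

# Values taken from the `formats` enum in the `/crawl` schema above.
ALLOWED_FORMATS = {"markdown", "html", "rawHtml", "links", "screenshot"}


def build_crawl_payload(url, **overrides):
    """Hypothetical helper: build a `/crawl` request body with the spec's defaults."""
    if not url:
        raise ValueError("`url` is the only required field and must be non-empty")

    payload = {
        "url": url,
        "maxDepth": 2,
        "ignoreSitemap": True,
        "limit": 10,
        "allowBackwardLinks": False,
        "allowExternalLinks": False,
        "scrapeOptions": {"formats": ["markdown"], "onlyMainContent": True},
    }

    # Merge nested scrape options separately so a partial override
    # does not discard the remaining defaults.
    scrape_overrides = overrides.pop("scrapeOptions", {})
    payload.update(overrides)
    payload["scrapeOptions"].update(scrape_overrides)

    unknown = set(payload["scrapeOptions"]["formats"]) - ALLOWED_FORMATS
    if unknown:
        raise ValueError(f"Unsupported formats: {sorted(unknown)}")
    return payload


if __name__ == "__main__":
    body = build_crawl_payload(
        "https://mendable.ai",
        limit=5,
        scrapeOptions={"formats": ["markdown", "links"]},
    )
    print(json.dumps(body, indent=2))
```

The resulting dictionary would be sent as the JSON body of a `POST` to `/crawl` with a bearer token, as the `bearerAuth` security scheme requires.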

|

||||||
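`CrawlStatusResponseObj` in the spec above exposes a nullable `next` URL for retrieving further chunks of `data`. One way a client might follow it is sketched below. The function `collect_crawl_data` and the `fetch_page` callable are hypothetical; `fetch_page` stands in for whatever authenticated HTTP GET the caller uses. Because the spec says `next` is also returned while the crawl is not completed, the sketch caps the number of follow-up requests (the `max_pages` parameter is an assumption, not part of the API).

```python
def collect_crawl_data(first_page, fetch_page, max_pages=100):
    """Hypothetical helper: gather `data` items by following `next` links.

    first_page: a dict shaped like CrawlStatusResponseObj.
    fetch_page: callable taking a URL and returning the next such dict.
    max_pages:  safety cap on follow-up requests.
    """
    documents = list(first_page.get("data", []))
    next_url = first_page.get("next")
    fetched = 0

    while next_url and fetched < max_pages:
        page = fetch_page(next_url)
        documents.extend(page.get("data", []))
        next_url = page.get("next")
        fetched += 1

    return documents


if __name__ == "__main__":
    # Fake pages standing in for real API responses.
    fake_pages = {
        "page-2": {"data": [{"markdown": "b"}], "next": None},
    }
    first = {"status": "completed", "data": [{"markdown": "a"}], "next": "page-2"}
    docs = collect_crawl_data(first, lambda url: fake_pages[url])
    print(len(docs))
```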

|

|

@ -228,7 +228,7 @@ class FirecrawlApp:

|

||||||

json_data = {'url': url}

|

json_data = {'url': url}

|

||||||

if params:

|

if params:

|

||||||

json_data.update(params)

|

json_data.update(params)

|

||||||

|

|

||||||

# Make the POST request with the prepared headers and JSON data

|

# Make the POST request with the prepared headers and JSON data

|

||||||

response = requests.post(

|

response = requests.post(

|

||||||

f'{self.api_url}{endpoint}',

|

f'{self.api_url}{endpoint}',

|

||||||

|

|

@ -238,7 +238,7 @@ class FirecrawlApp:

|

||||||

if response.status_code == 200:

|

if response.status_code == 200:

|

||||||

response = response.json()

|

response = response.json()

|

||||||

if response['success'] and 'links' in response:

|

if response['success'] and 'links' in response:

|

||||||

return response['links']

|

return response

|

||||||

else:

|

else:

|

||||||

raise Exception(f'Failed to map URL. Error: {response["error"]}')

|

raise Exception(f'Failed to map URL. Error: {response["error"]}')

|

||||||

else:

|

else:

|

||||||

|

|

@ -434,4 +434,4 @@ class CrawlWatcher:

|

||||||

self.dispatch_event('document', doc)

|

self.dispatch_event('document', doc)

|

||||||

elif msg['type'] == 'document':

|

elif msg['type'] == 'document':

|

||||||

self.data.append(msg['data'])

|

self.data.append(msg['data'])

|

||||||

self.dispatch_event('document', msg['data'])

|

self.dispatch_event('document', msg['data'])
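The `FirecrawlApp` hunk above changes the map call from returning `response['links']` to returning the whole `response` dictionary. Code that has to work against both SDK versions might normalise the result as sketched here. `extract_links` is a hypothetical helper, not part of the SDK, and it assumes the new return value follows the `MapResponse` shape (`success`, `links`).

```python
def extract_links(result):
    """Hypothetical helper: return the list of links from either return shape.

    Older behaviour: the map call returned the list of links directly.
    Newer behaviour: it returns the full response dict, which contains `links`.
    """
    if isinstance(result, dict):
        return list(result.get("links", []))
    return list(result)


if __name__ == "__main__":
    old_style = ["https://mendable.ai", "https://mendable.ai/pricing"]
    new_style = {"success": True, "links": old_style}
    print(extract_links(old_style) == extract_links(new_style))
```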

|

||||||

|

|

|

||||||

229

apps/rust-sdk/Cargo.lock

generated


|

|

@ -26,6 +26,21 @@ dependencies = [

|

||||||

"memchr",

|

"memchr",

|

||||||

]

|

]

|